Global Warming Policy Foundation

25 June 2014

Frank Bosse puts the spotlight on a global warming forecast published by British Met Office scientists in 2007. It appeared in Science. The peer-reviewed paper was authored by Doug M. Smith and colleagues under the title: “Improved Surface Temperature Prediction for the Coming Decade from a Global Climate Model”. Using sophisticated methods, the target of the paper was to forecast the temperature development from 2004 to 2014 while taking internal variability into account. Now that it’s 2014 and the observed data are in, we can compare to see how Smith et al. did with their forecast. Boy, did they fail! –Pierre Gosselin, No Tricks Zone, 24 June 2014

By 2014, we’re predicting that we’ll be 0.3 degrees warmer than 2004. Now just to put that into context, the warming over the past century and a half has only been 0.7 degrees, globally – now there have been bigger changes locally, but globally the warming is 0.7 degrees. So 0.3 degrees, over the next ten years, is pretty significant. And half the years after 2009 are predicted to be hotter than 1998, which was the previous record. So again, these are very strong statements about what will happen over the next ten years. So again, I think this illustrates that, you know, we can already see signs of climate change, but over the next ten years, we are expecting to see quite significant changes occurring. — Dr. Vicky Pope, Met Office, 5 September 2007

1) Met Office Fiasco: Global Temperature Forecast For 2014 – A Staggering Failure – No Tricks Zone, 24 June 2014

2) Swapping Climate Models For A Roll Of The Dice – The Resilient Earth, 19 June 2014

3) Terence Corcoran: The Cost Of Climate Alarmism – Financial Post, 25 June 2014

4) And Finally: Spot The Difference – Not A Lot Of People Know That, 24 June 2014

As long as man is unable to determine with the needed precision the role natural variability plays in our observed climate, calculating the impact of greenhouse gases will remain prophecy. –Frank Bosse, Die kalte Sonne, 23 June 2014

One of the greatest failures of climate science has been the dismal performance of general circulation models (GCMs) in predicting Earth’s future climate. For more than three decades huge predictive models, run on the biggest supercomputers available, have labored mightily and turned out garbage. –Doug L Hoffman, The Resilient Earth, 19 June 2014

Exactly how much climate alarmism and economic scaremongering will people endure before they turn off and decide to drive to the beach and otherwise have a good time, global warming or no global warming? Nobody knows, but a group of U.K. scientists said Tuesday that the tipping point may have already passed. In a report titled Time for Change? Climate Science Reconsidered, a group of eminent British academics from various disciplines and associated with University College London (UCL) warns that “fear appeals” may be turning the public against the climate issue as “too scary to think about.” If that’s true, U.S. President Barack Obama and his billionaire activist buddies (Tom Steyer, Michael Bloomberg and others) are on track to destroy their own campaigns. –Terence Corcoran, Financial Post, 25 June 2014

These two graphs are half-century plots of HADCRUT4 global temperatures. Both use exactly the same time and temperature scales. Which one is 1895-1945 (Nature’s fault), and which is 1963-2013 (Your fault)? –Paul Homewood, Not A Lot Of People Know That, 24 June 2014

1) Met Office Fiasco: Global Temperature Forecast For 2014 – A Staggering Failure

No Tricks Zone, 24 June 2014

Pierre Gosselin

Frank Bosse at Die kalte Sonne puts the spotlight on a global warming forecast published by some British Met Office scientists in 2007. It appeared in Science.

The peer-reviewed paper was authored by Doug M. Smith and colleagues under the title: “Improved Surface Temperature Prediction for the Coming Decade from a Global Climate Model”.

Using sophisticated methods, the target of the paper was to forecast the temperature development from 2004 to 2014 while taking the internal variability into account.

The claims made in Smith’s study are loud and clear (my emphasis):

“…predict further warming during the coming decade, with the year 2014 predicted to be 0.30° ± 0.21°C [5 to 95% confidence interval (CI)] warmer than the observed value for 2004. Furthermore, at least half of the years after 2009 are predicted to be warmer than 1998, the warmest year currently on record.”

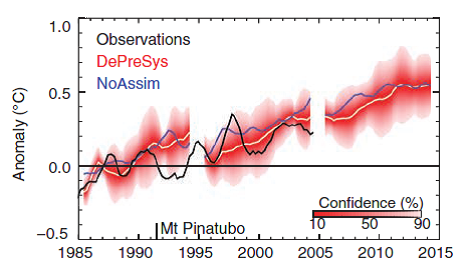

The first chart shows their forecast:

Now that it’s 2014 and the observed data are in, we can compare to see how Smith et al did with their forecast. Boy, did they fail!

The following chart shows the actual result of the Smith et al forecast, showing the real observations since 1998:

Clearly, the Met Office observations show a cooling of 0.014°C over the 2004-2014 decade, which is below even the lower confidence limit of the forecast. Moreover, not a single year was warmer than 1998, even though the forecast implied that at least three of the five years from 2010 to 2014 would be.
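The comparison above comes down to simple arithmetic, which the following Python sketch spells out. It uses only the figures quoted in this article (the 0.30 ± 0.21°C forecast and the 0.014°C cooling). The variable and function names are illustrative, and reading "years after 2009" as 2010 through 2014 is an assumption.

```python
import math

# Figures quoted in the article (degrees Celsius, 2014 relative to 2004).
FORECAST_CHANGE = 0.30      # central forecast from Smith et al. (2007)
FORECAST_HALF_WIDTH = 0.21  # half-width of the 5 to 95% confidence interval
OBSERVED_CHANGE = -0.014    # cooling reported in the article


def forecast_interval(center, half_width):
    """Return the (lower, upper) bounds of a symmetric interval."""
    return center - half_width, center + half_width


lower, upper = forecast_interval(FORECAST_CHANGE, FORECAST_HALF_WIDTH)
inside = lower <= OBSERVED_CHANGE <= upper
shortfall = lower - OBSERVED_CHANGE  # how far below the lower bound

# Assumption: "years after 2009" means 2010 through 2014 inclusive.
years_after_2009 = list(range(2010, 2015))
min_record_years = math.ceil(len(years_after_2009) / 2)

print(f"Forecast interval: {lower:.2f} to {upper:.2f} °C")
print(f"Observed change inside interval: {inside}")
print(f"Shortfall below lower bound: {shortfall:.3f} °C")
print(f"Years predicted to beat 1998: at least {min_record_years} "
      f"of {len(years_after_2009)}")
```

On these numbers the lower bound of the forecast is 0.09°C, so the reported observation falls roughly a tenth of a degree short of it.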

According to Bosse, when the 2007 chart was published it was supposed to act as another nail in the coffin for global warming skeptics. The chart was even adopted by a German report titled: “Future information for the government.” Bosse writes:

“Here one reads that ‘good decadal forecasts for policymaking and economy are very useful’ (page 6) … as long as they are ignored, one might add.”

Bosse calls the chart a fiasco because it falsely advised policymaking.

2) Swapping Climate Models For A Roll Of The Dice

The Resilient Earth, 19 June 2014

Doug L Hoffman

One of the greatest failures of climate science has been the dismal performance of general circulation models (GCMs) in predicting Earth’s future climate. For more than three decades huge predictive models, run on the biggest supercomputers available, have labored mightily and turned out garbage.

Their most obvious failure was missing the now almost eighteen-year “hiatus,” the pause in temperature rise that has confounded climate alarmists and serious scientists alike. So poor has been the models’ performance that some climate scientists are calling for them to be torn down and built anew, this time using different principles. They want to adopt stochastic methods (so-called Monte Carlo simulations based on probabilities and randomness) in place of today’s physics-based models.

It is an open secret that computer climate models just aren’t very good. Recently scientists on the Intergovernmental Panel on Climate Change (IPCC) compared the predictions of 20 major climate models against the past six decades of climate data. According to Ben Kirtman, a climate scientist at the University of Miami in Florida and IPCC AR5 coordinating author, the results were disappointing. According to a report in Science, “the models performed well in predicting the global mean surface temperature and had some predictive value in the Atlantic Ocean, but they were virtually useless at forecasting conditions over the vast Pacific Ocean.”

Just how bad the models are can be seen in a graph that has been widely seen around the Internet. Generated by John Christy, Richard McNider, and Roy Spencer, the graph has generated more heat than global warming, with climate modeling apologists firing off rebuttal after rebuttal. Problem is, the models still suck, as you can see from the figure below.

Regardless of the warmists’ quibbles the truth is plain to see, climate models miss the mark. But then, this comes as no surprise to those who work with climate models. In the Science article, “A touch of the random,” science writer Colin Macilwain lays out the problem: “researchers have usually aimed for a deterministic solution: a single scenario for how climate will respond to inputs such as greenhouse gases, obtained through increasingly detailed and sophisticated numerical simulations. The results have been scientifically informative—but critics charge that the models have become unwieldy, hobbled by their own complexity. And no matter how complex they become, they struggle to forecast the future.”

Macilwain describes the current crop of models this way:

One key reason climate simulations are bad at forecasting is that it’s not what they were designed to do. Researchers devised them, in the main, for another purpose: exploring how different components of the system interact on a global scale. The models start by dividing the atmosphere into a huge 3D grid of boxlike elements, with horizontal edges typically 100 kilometers long and up to 1 kilometer high. Equations based on physical laws describe how variables in each box—mainly pressure, temperature, humidity, and wind speed—influence matching variables in adjacent ones. For processes that operate at scales much smaller than the grid, such as cloud formation, scientists represent typical behavior across the grid element with deterministic formulas that they have refined over many years. The equations are then solved by crunching the whole grid in a supercomputer.

It’s not that the modelers haven’t tried to improve their play toys. Over the years all sorts of new factors have been added, each adding more complexity to the calculations and hence slowing down the computation. But that is not where the real problem lies. The irreducible source of error in current models is the grid size.

Indeed, I have complained many times in this blog that the fineness of the grid is insufficient to the problem at hand. This is because many phenomena are much smaller than the grid boxes. Tropical storms, for instance, represent huge energy transfers from the ocean surface to the upper atmosphere and can be totally missed. Other factors, such as rainfall and cloud formation, also happen at sub-grid scales.

“The truth is that the level of detail in the models isn’t really determined by scientific constraints,” says Tim Palmer, a physicist at the University of Oxford in the United Kingdom who advocates stochastic approaches to climate modeling. “It is determined entirely by the size of the computers.”

The problem is that halving the size of the grid divisions requires an order-of-magnitude increase in computer power. Making the grid fine enough is just not possible with today’s technology.
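The order-of-magnitude figure can be motivated with a rough scaling argument. The sketch below assumes that cost is proportional to the number of grid cells times the number of time steps, and that the time step must shrink in proportion to the grid spacing (a common stability requirement for explicit numerical schemes). Neither assumption comes from the article, and the function name is hypothetical.

```python
def cost_multiplier(refinement, spatial_dims=3, shrink_time_step=True):
    """Estimate how much more computation a finer grid needs.

    refinement: factor by which the grid spacing is divided (2 = halved).
    spatial_dims: number of spatial dimensions being refined.
    shrink_time_step: assume the time step shrinks by the same factor.
    """
    if refinement <= 0:
        raise ValueError("refinement must be positive")
    # Cells multiply by refinement in each refined dimension; time steps
    # multiply by refinement once more if the step has to shrink too.
    exponent = spatial_dims + (1 if shrink_time_step else 0)
    return refinement ** exponent


# Halving the spacing horizontally only, and in all three dimensions.
print("Horizontal refinement only:", cost_multiplier(2, spatial_dims=2))
print("Full 3D refinement:", cost_multiplier(2, spatial_dims=3))
```

Under these assumptions, halving the spacing costs between 8 and 16 times as much computation, which is in line with the order of magnitude cited above.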

In light of this insurmountable problem, some researchers go so far as to demand a major overhaul, scrapping the current crop of models altogether. Taking cues from meteorology and other sciences, the model reformers say the old physics-based models should be abandoned and new models, based on stochastic methods, need to be written from the ground up. Pursuing this goal, a special issue of the Philosophical Transactions of the Royal Society A will publish 14 papers setting out a framework for stochastic climate modeling. Here is a description of the topic:

This Special Issue is based on a workshop at Oriel College Oxford in 2013 that brought together, for the first time, weather and climate modellers on the one hand and computer scientists on the other, to discuss the role of inexact and stochastic computation in weather and climate prediction. The scientific basis for inexact and stochastic computing is that the closure (or parametrisation) problem for weather and climate models is inherently stochastic. Small-scale variables in the model necessarily inherit this stochasticity. As such it is wasteful to represent these small scales with excessive precision and determinism. Inexact and stochastic computing could be used to reduce the computational costs of weather and climate simulations due to savings in power consumption and an increase in computational performance without loss of accuracy. This could in turn open the door to higher resolution simulations and hence more accurate forecasts.

In one of the papers in the special edition, “Stochastic modelling and energy-efficient computing for weather and climate prediction,” Tim Palmer, Peter Düben, and Hugh McNamara state the stochastic modeler’s case:

[A] new paradigm for solving the equations of motion of weather and climate is beginning to emerge. The basis for this paradigm is the power-law structure observed in many climate variables. This power-law structure indicates that there is no natural way to delineate variables as ‘large’ or ‘small’—in other words, there is no absolute basis for the separation in numerical models between resolved and unresolved variables.

In other words, we are going to estimate what we don’t understand and hope those pesky problems of scale just go away. “A first step towards making this division less artificial in numerical models has been the generalization of the parametrization process to include inherently stochastic representations of unresolved processes,” they state. “A knowledge of scale-dependent information content will help determine the optimal numerical precision with which the variables of a weather or climate model should be represented as a function of scale.” It should also be noted that these guys are pushing “inexact” or fuzzy computer hardware to better accommodate their ideas, but that does not change the importance of their criticism of current modeling techniques.

So what is this “stochastic computing” that is supposed to cure all of climate modeling’s ills? It is actually something quite old, often referred to as Monte Carlo simulation. In probability theory, a purely stochastic system is one whose state is non-deterministic: in other words, random. The subsequent state of the system is determined probabilistically using randomly generated numbers, the computer equivalent of throwing dice. Any system or process that must be analyzed using probability theory is stochastic at least in part. Perhaps the most famous early use was by Enrico Fermi in the 1930s, when he used a random method to calculate the properties of the newly discovered neutron. Nowadays, the technique is used by professionals in such widely disparate fields as finance, project management, energy, manufacturing, engineering, research and development, insurance, oil & gas, transportation, and the environment.

Monte Carlo simulation generates a range of possible outcomes and the probabilities with which they will occur. Monte Carlo techniques are quite useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and weather forecasts. Other examples include modeling phenomena with significant uncertainty in inputs, which certainly applies to climate modeling. Unlike current GCMs, this approach does not seek to simulate natural, physical processes, but rather to capture the random nature of various factors and then make many simulations, called an ensemble.
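A minimal Python sketch of the idea follows. It is a toy, not a climate model: each ensemble member is a random walk with a fixed drift and Gaussian noise, and the drift, noise level and ensemble size are hypothetical values chosen only to show how many random runs turn into a range of outcomes.

```python
import random
import statistics


def simulate_path(rng, steps, drift, noise_sd):
    """One random-walk realization: each step adds drift plus Gaussian noise."""
    value = 0.0
    for _ in range(steps):
        value += drift + rng.gauss(0.0, noise_sd)
    return value


def run_ensemble(members, steps, drift, noise_sd, seed=0):
    """Run many independent realizations from one seeded generator."""
    rng = random.Random(seed)
    return [simulate_path(rng, steps, drift, noise_sd) for _ in range(members)]


def percentile_range(outcomes):
    """Return the 5th and 95th percentiles of the ensemble outcomes."""
    cuts = statistics.quantiles(outcomes, n=20)  # 19 cut points, 5% apart
    return cuts[0], cuts[-1]


# Hypothetical parameters, chosen only for illustration.
ensemble = run_ensemble(members=1000, steps=10, drift=0.03, noise_sd=0.1)
low, high = percentile_range(ensemble)
print(f"Ensemble mean: {statistics.mean(ensemble):.3f}")
print(f"5th to 95th percentile range: {low:.3f} to {high:.3f}")
```

The output is a distribution rather than a single number, which is what allows a forecast to be stated with a confidence interval.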

Since the 1990s, ensemble forecasts have been used as routine forecasts to account for the inherent uncertainty of weather processes. This involves analyzing multiple forecasts created with an individual forecast model by using different physical parameters and/or varying the initial conditions. Such ensemble forecasts have been used to help define forecast uncertainty and to extend forecasting further into the future than otherwise possible. Still, as we all know, even the best weather forecasts are only good for five or six days before they diverge from reality.
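Why forecasts started from nearly identical conditions drift apart can be illustrated with a standard toy system. The sketch below uses the logistic map as a stand-in for a chaotic process. It is not a weather model, and the starting value, perturbation size and number of steps are arbitrary assumptions.

```python
def logistic_step(x, r=4.0):
    """Advance the logistic map one step (r = 4 is in the chaotic regime)."""
    return r * x * (1.0 - x)


def ensemble_spread(initial_states, steps):
    """Track max minus min across ensemble members at every step."""
    states = list(initial_states)
    spreads = [max(states) - min(states)]
    for _ in range(steps):
        states = [logistic_step(x) for x in states]
        spreads.append(max(states) - min(states))
    return spreads


# Hypothetical ensemble: ten starting points one part in a million apart.
members = [0.4 + i * 1e-6 for i in range(10)]
spreads = ensemble_spread(members, steps=40)
for step in (0, 10, 20, 40):
    print(f"step {step:2d}: spread = {spreads[step]:.6f}")
```

The members start almost indistinguishable, and the spread between them grows as the steps accumulate. The widening spread is what an ensemble forecast uses as its measure of uncertainty.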

An example can be seen in the tracking of Atlantic hurricanes. It is now common for the nightly weather forecast during hurricane season to include a probable track for a hurricane approaching the US mainland. The probable track is derived from many individual model runs.

Can stochastic models be successfully applied to climate change? Such models are based on a current state which is the starting point for generating many future forecasts. The outputs are based on randomness filtered through observed (or guessed at) probabilities. This, in theory, can account for such random events as tropical cyclones and volcanic eruptions more accurately than today’s method of just applying an average guess across all simulation cells. The probabilities are based on previous observations, which means that the simulations are only valid if the system does not change in any significant way in the future.

And herein lies the problem with shifting to stochastic simulations of climate change. It is well known that Earth’s climate system is constantly changing, creating what statisticians term nonstationary time series data. You can fit a model to previous conditions by tweaking the probabilities and inputs, but you cannot make it forecast the future because the future requires a model of something that has not taken form yet. Add to that the nature of climate according to the IPCC: “The climate system is a coupled non-linear chaotic system, and therefore the long-term prediction of future climate states is not possible.”

If such models had been constructed before the current hiatus (the 17+ year pause in rising global temperatures that nobody saw coming), they would have been at as much of a loss as the current crop of GCMs. You cannot accurately predict that which you have not previously experienced, measured, and parametrized, and our detailed climate data are laughably limited. With perhaps a half century of detailed measurements, there is no possibility of constructing models that would encompass the warm and cold periods of the Holocene interglacial, let alone the events that marked the last deglaciation (or those that will mark the start of the next glacial period).

3) Terence Corcoran: The Cost Of Climate Alarmism

Financial Post, 25 June 2014

U.S. President Barack Obama and his billionaire activist buddies (Tom Steyer, Michael Bloomberg and others) are on track to destroy their own campaigns

Exactly how much climate alarmism and economic scaremongering will people endure before they turn off and decide to drive to the beach and otherwise have a good time, global warming or no global warming? Nobody knows, but a group of U.K. scientists said Tuesday that the tipping point may have already passed.

In a report titled Time for Change? Climate Science Reconsidered, a group of eminent British academics from various disciplines and associated with University College London (UCL) warns that “fear appeals” may be turning the public against the climate issue as “too scary to think about.”

If that’s true, U.S. President Barack Obama and his billionaire activist buddies (Tom Steyer, Michael Bloomberg and others) are on track to destroy their own campaigns.

A whole chapter of the U.K. report — chaired by UCL Professor Chris Rapley — is dedicated to “The Consequences of Fear Appeals” on public opinion. “Alarmist messages have also played a direct role in the loss of trust in the science community,” it says. “The failure of specific predictions of climate change to materialize creates the impression that the climate science community as a whole resorts to raising false alarms. When apparent failures are not adequately explained, future threats become less believable.”

All of which is apparently news to U.S. billionaire activists Bloomberg and Steyer. On Tuesday they released their own report, Risky Business: The Economic Risks of Climate Change in the United States, a national fear-induction document that — if the British scientists are right — could promote indifference to climate change issues across the United States and might even sink the issue long term.

Henry Paulson, who also co-chaired the Risky Business report with the two billionaires, wrote a related commentary in The New York Times headlined “The Coming Climate Crash.” He called it a “crisis” and warned “we fly on a collision course toward a giant mountain. We can see the crash coming…”

So far, few Americans are fastening their seatbelts. Risky Business is a catalogue of a looming economic hell in which heat, humidity, floods, fires and hurricanes will inundate the United States, preventing people from working as conditions become “literally unbearable to humans, who must maintain a skin temperature below 95 degrees F … to avoid fatal heat stroke.” No such conditions have ever existed in the States, but the report warns that such days could become routine by the end of the century and beyond.

Page after page warns of economic calamity due to the lost ability to work, sea-level rise, property loss, increased mortality, heat stroke, declining crop yields, storm surges and energy disruptions. Dollar-loss numbers run to the trillions.

Example: By 2100, up to $700-billion worth of property will be below sea levels.

Risky Business was funded by the Bloomberg Philanthropies, the Rockefellers, Hank Paulson and the TomKat Charitable Trust, the key charitable operation funded by Tom Steyer, the former investment manager who has turned killing Canada’s Keystone XL pipeline into a personal crusade.

The objective of these wealthy green activists is to bolster President Obama’s foundering climate policy agenda. The President is set to speak Wednesday night to the League of Conservation Voters.

When he addresses the conservationists, Mr. Obama will mark the anniversary of his June 25, 2013, Georgetown University climate speech, a sweaty affair in which he asked everybody to remove their jackets while he declared war on carbon dioxide as a “toxin.”

Mr. Obama will also reinforce his new anti-coal policies and revisit the alarmist warnings contained in recent U.S. government reports on the looming climate catastrophe.

Unfortunately for Mr. Obama, the American machinery of alarm faces three overwhelming obstacles. The biggest is that the alarms are not being sounded in the developing world, where they see climate change — to the degree that it is an issue — as secondary to their economic objectives.

India’s environment minister, Prakash Javadekar, said last week that growth trumps climate in India. “The issue is that … India and developing countries have right to grow,” the Times of India reported. As a result, India’s carbon emissions will increase in future and the country will not address climate change until it has eradicated poverty.

How far will U.S. voters let Washington go on climate change before the U.S. has eradicated poverty?

But the toughest obstacle to overcome will be the climate of fear created around a science issue that is far from certain.

4) And Finally: Spot The Difference

Not A Lot Of People Know That, 24 June 2014

Paul Homewood

The above two graphs are half-century plots of HADCRUT4 global temperatures. Both use exactly the same time and temperature scales.

Which one is 1895-1945 (Nature’s fault), and which is 1963-2013 (Your fault)?